July 26th, 2024

Top 21 Large Language Models Revolutionizing AI in 2024

By Fahim Joharder

Are you overwhelmed by the sheer number of large language models flooding the AI market?

It's tough to know which ones are truly groundbreaking and which are just hype. Staying ahead of the curve in this rapidly evolving field feels like an impossible task.

But missing out on the latest advancements in language models means missing out on opportunities for innovation and growth. Imagine being left behind as your competitors harness the power of AI to streamline operations, enhance customer experiences, and drive revenue.

Don't worry. We've got you covered. In this comprehensive guide, we'll unveil the top 21 large language models revolutionizing AI in 2024.

Which Large Language Models are Leading the AI Revolution?

We'll delve into their unique features, capabilities, and the transformative impact they're having on various industries.

Whether you're a tech enthusiast, a business leader, or simply curious about the future of AI, this is your roadmap to understanding the most significant language models of our time.

1. GPT-4: The Multimodal Powerhouse

OpenAI's GPT-4 stands out for its multimodal capabilities: it accepts both text and image inputs and produces text outputs. Stronger reasoning and a larger context window than its predecessor, GPT-3.5, make it a top choice for a wide range of applications.

2. LaMDA: The Conversational AI Virtuoso

Google's LaMDA excels at natural and engaging conversations. Its ability to understand nuances in language and generate contextually relevant responses makes it ideal for chatbots and virtual assistants.

3. PaLM 2: Google's Next-Gen LLM

PaLM 2, Google's successor to PaLM, boasts improved multilingual, reasoning, and coding capabilities. Its potential applications span from translation services to scientific research.

4. LLaMA: Meta's Open-Source Contribution

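Meta's LLaMA has made waves in the AI community due to its strong performance at relatively small model sizes. The original LLaMA weights were released to researchers under a noncommercial license, while its successors, starting with Llama 2, can be downloaded under Meta's community license. This openness helps democratize access to powerful LLMs.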

5. Claude: Anthropic's Safe and Helpful AI

Claude's core principles are safety and helpfulness. It aims to mitigate harmful or biased outputs, making it a reliable choice for applications where ethical considerations are paramount.

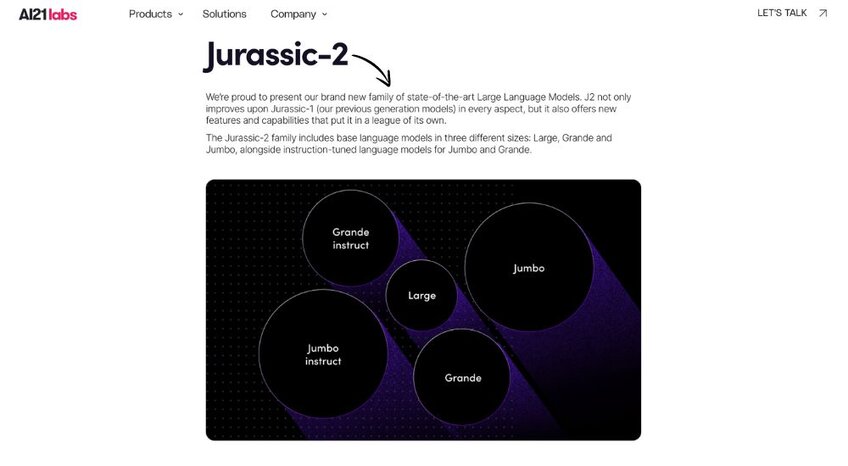

6. Jurassic-2: AI21 Labs' Multilingual Model

Jurassic-2 offers strong multilingual capabilities and impressive performance on a variety of tasks, from text generation to translation. Its versatility makes it a valuable tool for global businesses.

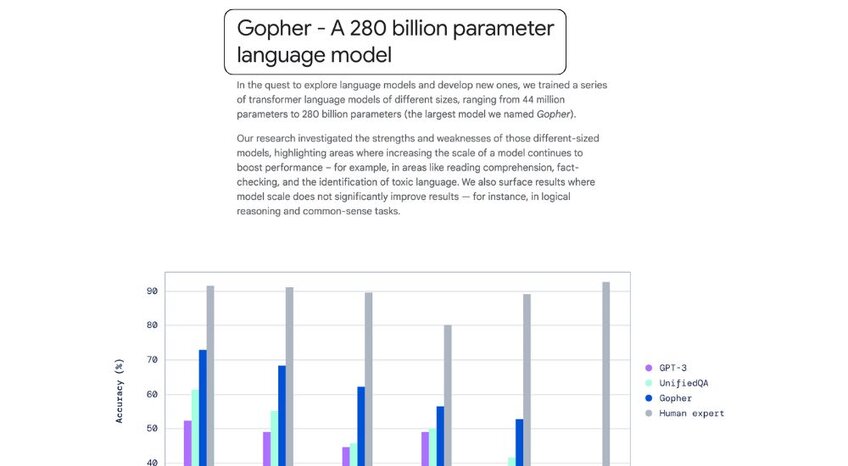

7. Gopher: DeepMind's Knowledge-Focused Model

Gopher is known for its extensive knowledge base, making it particularly adept at answering factual questions and providing detailed explanations. It's a valuable resource for research and education.

8. Chinchilla: DeepMind's Efficient Scaling Model

Chinchilla demonstrates that optimal model performance isn't just about size but also about the balance between model parameters and training data. Its efficiency could lead to more accessible LLMs.
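The balance between parameters and training data can be made concrete with a little arithmetic. The sketch below is a rough illustration, not DeepMind's method: it assumes two widely quoted rules of thumb, that training compute is about 6 × parameters × tokens in FLOPs and that a compute-optimal model sees about 20 tokens per parameter. The function names are hypothetical, and the example budget uses Chinchilla's reported size of 70 billion parameters trained on 1.4 trillion tokens.

```python
import math
from typing import Tuple

# Both constants are rules of thumb (assumptions), not exact laws.
FLOPS_PER_PARAM_TOKEN = 6   # training compute C ~= 6 * N * D
TOKENS_PER_PARAM = 20       # compute-optimal ratio D ~= 20 * N


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for N parameters and D tokens."""
    return FLOPS_PER_PARAM_TOKEN * params * tokens


def compute_optimal_split(flops_budget: float) -> Tuple[float, float]:
    """Split a compute budget into (parameters, tokens).

    Substituting D = 20 * N into C = 6 * N * D gives N = sqrt(C / 120).
    """
    params = math.sqrt(flops_budget / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


if __name__ == "__main__":
    # Budget implied by a 70B-parameter model trained on 1.4T tokens.
    budget = training_flops(70e9, 1.4e12)
    params, tokens = compute_optimal_split(budget)
    print(f"budget: {budget:.3g} FLOPs")
    print(f"suggested split: {params:.3g} parameters, {tokens:.3g} tokens")
```

Under these assumptions, a fixed budget is spent on a smaller model with more data rather than on the largest model possible.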

9. Megatron-Turing NLG: A Collaboration Giant

Developed jointly by Microsoft and NVIDIA, Megatron-Turing NLG is one of the largest language models to date. Its immense size allows it to tackle complex language tasks with impressive accuracy.

10. BLOOM: The Multilingual Open Science Model

BLOOM is a multilingual language model trained on a massive dataset of 46 natural languages and 13 programming languages. Its open-source nature fosters collaboration and research in the AI community.

11. AlexaTM 20B: The Voice Assistant Powerhouse

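Amazon's AlexaTM 20B is a 20-billion-parameter multilingual sequence-to-sequence model from the Alexa AI team. Its few-shot performance on tasks such as summarization and machine translation shows how quickly the language technology behind voice assistants is advancing.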

12. M2M-100: Facebook's Multilingual Translation Model

M2M-100 is designed for direct translation between any pair of 100 languages without relying on English as an intermediary. It represents a significant advancement in multilingual communication.

13. GPT-Neo: EleutherAI's Open-Source Alternative

GPT-Neo, developed by EleutherAI, offers an open-source alternative to OpenAI's GPT models. It provides researchers and developers with a powerful tool to experiment with and build upon.

14. GPT-J: EleutherAI's 6 Billion Parameter Model

GPT-J, another EleutherAI model, has 6 billion parameters and delivers strong results in text generation and other language tasks.

15. Cohere: The Customizable AI Platform

Cohere offers a platform for building custom large language models tailored to specific business needs. This flexibility allows companies to leverage the power of AI in a way that aligns with their unique goals.

16. BERT: The Bidirectional Transformer Revolution

BERT, developed by Google, popularized bidirectional pre-training for transformer models. By predicting masked words from both their left and right context, it significantly improved how well models understand words in context.

17. RoBERTa: Facebook's BERT Enhancement

RoBERTa builds upon BERT's architecture, using more training data and modified training techniques to achieve even better performance on various natural language processing benchmarks.

18. XLNet: The Permutation-Based Language Model

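XLNet introduces a permutation-based training objective that predicts tokens under many different factorization orders of the sequence, letting the model use context from both directions without relying on masked tokens. Built on Transformer-XL, it also handles long-range dependencies in text well.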

19. T5: Google's Text-to-Text Transfer Transformer

T5 frames all language tasks as text-to-text problems, allowing it to be applied to a wide range of applications, including translation, summarization, and question-answering.
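As a small illustration of the text-to-text idea, the sketch below formats different tasks as plain input strings by prepending a task prefix. The two prefixes follow the style used in the T5 paper. The helper name `to_text_to_text` and the task keys are hypothetical, and no model is loaded or called here.

```python
# Task prefixes in the style used by T5. The dictionary keys are
# illustrative labels chosen for this sketch, not part of any library.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}


def to_text_to_text(task: str, text: str) -> str:
    """Turn a (task, input) pair into a single input string for the model."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text


if __name__ == "__main__":
    print(to_text_to_text("translate_en_de", "The house is wonderful."))
    print(to_text_to_text("summarize", "Large language models are trained on text."))
```

Because every task becomes "text in, text out", the same model, loss, and decoding procedure can serve all of them.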

20. ELECTRA: A More Efficient Pre-training Approach

ELECTRA proposes a more efficient pre-training approach called replaced token detection: a small generator swaps some input tokens for plausible alternatives, and the main model learns to tell the original tokens from the replaced ones. This method has shown promising results in improving model performance at a lower computational cost.
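The sketch below shows how training labels for this kind of objective might be built. It is a simplified illustration under stated assumptions: ELECTRA uses a small masked language model as its generator, whereas this example substitutes uniform random sampling from a toy vocabulary. The function name, the 15% replacement rate, and the vocabulary are all hypothetical.

```python
import random
from typing import List, Tuple


def corrupt_and_label(
    tokens: List[str],
    vocab: List[str],
    replace_prob: float = 0.15,
    seed: int = 0,
) -> Tuple[List[str], List[int]]:
    """Replace some tokens and label each position (1 = replaced, 0 = original).

    Random sampling stands in for the generator network (an assumption).
    If the sampled token happens to equal the original, the position is
    labeled as original, since the text there is unchanged.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < replace_prob:
            candidate = rng.choice(vocab)
        else:
            candidate = token
        corrupted.append(candidate)
        labels.append(int(candidate != token))
    return corrupted, labels


if __name__ == "__main__":
    sentence = "the chef cooked the meal".split()
    toy_vocab = ["the", "chef", "cooked", "ate", "meal", "dog"]
    corrupted, labels = corrupt_and_label(sentence, toy_vocab, replace_prob=0.3)
    for original, new, label in zip(sentence, corrupted, labels):
        print(f"{original:>8} -> {new:<8} label={label}")
```

Every position gets a label, so the model learns from all tokens rather than only from a small masked subset.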

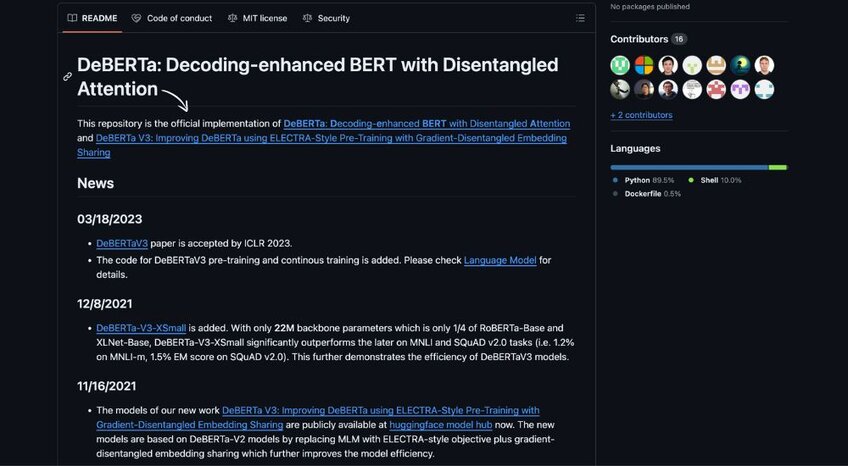

21. DeBERTa: Microsoft's Enhanced BERT Model

DeBERTa incorporates several architectural improvements over BERT, including disentangled attention and an enhanced mask decoder. These modifications have led to state-of-the-art performance on various natural language understanding tasks.

Why are Large Language Models So Important?

Large language models are transforming the field of artificial intelligence, and for good reason.

At their core, these are sophisticated AI models trained on massive amounts of text data. They leverage the power of machine learning and transformer architectures to understand and generate human-like text, enabling a wide array of applications.

From complex chatbots that can hold meaningful conversations and tools that generate creative content to simple AI-assisted study platforms, large language models are pushing the boundaries of what's possible with AI.

Some of the best large language models, like GPT-4 and LaMDA, have become foundation models, meaning they serve as a basis for building other AI applications.

These models are not just tools; they're catalysts for innovation, driving advancements in natural language processing and reshaping industries worldwide, including medicine.

Understanding how large language models work is key to unlocking their full potential and staying ahead in the ever-evolving world of AI.

Final Thoughts

The world of large language models is a dynamic and ever-evolving landscape, with new AI models emerging and existing ones being constantly fine-tuned.

It's clear that these models, whether encoder-based like BERT or generative like GPT-4, have the potential to reshape industries, redefine communication, and revolutionize how we interact with information.

However, maintaining large language models and ensuring their ethical use remains a critical challenge. As we navigate this exciting frontier of artificial intelligence, it's crucial to stay informed, adapt, and embrace the transformative power of large language models.

The future of AI is here, and it's being written one word at a time.

Frequently Asked Questions

What is a transformer model in the context of large language models?

A transformer model is a neural network architecture that revolutionized natural language processing. It uses self-attention mechanisms to weigh the importance of different words in a sentence, enabling it to understand context and long-range dependencies better. This makes transformer models a cornerstone of many large language models today.
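To make the weighing step concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It assumes the query, key, and value vectors are already given. Real transformer layers also learn projection matrices, use multiple attention heads, and run on tensor libraries, all of which are omitted here. The toy vectors are made up for illustration.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Convert raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> Tuple[List[Vector], List[Vector]]:
    """Return (outputs, weights) for scaled dot-product attention."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this word's query to every word's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Three "words", each a made-up 2-dimensional vector.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(x, x, x)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of weights shows how much one word attends to every other word in the sequence, which is how the model picks up context.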

How do you maintain large language models?

Maintaining large language models involves regular updates and fine-tuning. This includes monitoring performance, addressing biases, and incorporating new data to improve accuracy and relevance. It's a continuous process to ensure the model stays up-to-date and reliable.

What is the significance of fine-tuning in large language models?

Fine-tuning is a crucial step in adapting a pre-trained large language model to a specific task or domain. It involves further training the model on a smaller, more targeted dataset to enhance its performance in that particular area. This allows for greater customization and accuracy in specific applications.
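The idea can be illustrated with a deliberately tiny stand-in. The sketch below treats a two-parameter linear model as the "pre-trained" model and continues training it with gradient descent on a small, targeted dataset. This is a toy under stated assumptions, not how LLMs are fine-tuned in practice: the starting weights, the data, the learning rate, and the function names are all made up for illustration.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def mse(w: float, b: float, data: Dataset) -> float:
    """Mean squared error of the model y = w * x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(
    w: float, b: float, data: Dataset, lr: float = 0.05, steps: int = 200
) -> Tuple[float, float]:
    """Continue training from existing weights on a small dataset."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Pretend these weights came from large-scale pre-training.
    pretrained_w, pretrained_b = 2.0, 0.0
    # A small domain-specific dataset that follows y = 2.5 * x + 1.
    domain_data = [(0.0, 1.0), (1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

    before = mse(pretrained_w, pretrained_b, domain_data)
    tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
    after = mse(tuned_w, tuned_b, domain_data)
    print(f"error before: {before:.4f}, after: {after:.6f}")
```

The key point is that training starts from existing weights instead of from scratch, so a small dataset is enough to adapt the model to the new domain.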

How are large language models changing the way we interact with technology?

Large language models are transforming human-computer interaction by enabling more natural and intuitive communication. They power conversational AI, content generation tools, and personalized recommendations, making technology more accessible and user-friendly.

What are the ethical considerations surrounding the use of large language models?

Ethical concerns include the potential for bias in the training data, the generation of misleading or harmful content, and the impact on jobs and society. Addressing these issues is essential for the responsible and beneficial development of large language models.

Author

Author: Fahim Joharder

Fahim is a software and AI specialist with diverse interests, including travel, kayaking, and golf. He is a dedicated professional who also prioritizes family life. Fahim's well-rounded perspective allows him to bring a unique approach to his work in technology.

Website: www.paddlestorm.com

Linkedin: https://www.linkedin.com/in/fahimjoharder/

Twitter: https://twitter.com/fahimjoharder